I've been working with IEEE Shareable Competency Definitions (SCDs) and xAPI for evidence-based competency assertion, and I keep hitting the same challenge: there's a gap between the definition of a competency (especially one with a rubric) and the operational rules for asserting that someone has achieved it based on real xAPI evidence.

IEEE SCDs do a great job defining competencies and their rubric structures—they tell us WHAT we're assessing. xAPI profiles define how to capture learning evidence as statements—they give us reusable patterns for HOW activities generate evidence. But what's missing is the connective tissue: how do we map the achievement of specific rubric items or levels to patterns of xAPI statements in an LRS?

This becomes especially challenging when:

- xAPI profiles for a domain don't exist yet or are incomplete

- Evidence spans multiple learning experiences (different registrations)

- We need granular progress indicators, not just binary "achieved/not achieved"

- We want to prototype competency assertion quickly without waiting for formal profile development

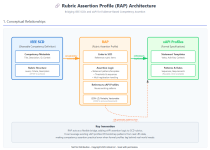

The Proposed Solution: Rubric Assertion Profile (RAP)

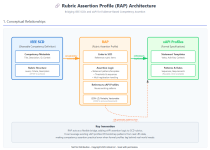

I'm proposing a new JSON-LD data model called a Rubric Assertion Profile (RAP) that acts as a bridge between SCDs and xAPI evidence streams. Think of it as "ornamenting" or extending SCD rubric items with xAPI-specific assertion logic.

What's in a RAP?

A RAP is a portable JSON-LD file that contains:

- Links to SCD rubric items - References to specific competency definitions and their rubric structure

- Assertion rules - Logic for evaluating xAPI evidence:

  - Statement templates and patterns (can reference existing xAPI profiles)

  - Thresholds (e.g., "completed 5 of 7 activities")

  - Sequences (e.g., "must complete A before attempting B")

  - Multi-registration handling (evidence across multiple courses/experiences)

- References to xAPI profiles - When formal profiles exist, RAP leverages them; when they don't, RAP can bootstrap patterns from actual LRS data
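
To make the list above more concrete, here is a minimal sketch of what a RAP document could look like. It is built as a Python dict and serialized to JSON. Everything under example.org, the `rap:` vocabulary, and property names such as `minCount` and `minDistinctRegistrations` are hypothetical placeholders. Only the two verb IRIs are standard ADL verbs. The `@vocab` entry is one way to keep otherwise undefined terms from being dropped when the document is expanded as JSON-LD.

```python
import json

# Hypothetical: every example.org IRI and every "rap:" term is a placeholder,
# not a published vocabulary. The two verb IRIs are standard ADL verbs.
COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
ATTEMPTED = "http://adlnet.gov/expapi/verbs/attempted"


def build_rap() -> dict:
    """Return a minimal, illustrative Rubric Assertion Profile as a dict."""
    labs = [f"https://example.org/activities/lab-{i}" for i in range(1, 8)]
    return {
        "@context": {
            # Undefined terms (verb, minCount, ...) expand against this vocab.
            "@vocab": "https://example.org/rap/v0#",
            "rap": "https://example.org/rap/v0#",
            # Values of these terms are IRIs, not plain strings.
            "rubricItem": {"@id": "rap:rubricItem", "@type": "@id"},
            "profileTemplate": {"@id": "rap:profileTemplate", "@type": "@id"},
        },
        "@id": "https://example.org/raps/data-analysis-level-2",
        "@type": "rap:RubricAssertionProfile",
        # Link to the SCD rubric item; the SCD itself is not modified.
        "rubricItem": "https://example.org/scd/data-analysis#level-2",
        "rules": [
            {   # Threshold: "completed 5 of 7 activities"
                "@type": "rap:ThresholdRule",
                "verb": COMPLETED,
                "activities": labs,
                "minCount": 5,
                # Optional pointer to a statement template in an existing profile.
                "profileTemplate": "https://example.org/profiles/labs#completed",
            },
            {   # Sequence: "must complete A before attempting B"
                "@type": "rap:SequenceRule",
                "first": {"verb": COMPLETED, "activity": labs[0]},
                "then": {"verb": ATTEMPTED, "activity": labs[6]},
            },
            {   # Multi-registration: evidence must span >= 2 registrations
                "@type": "rap:RegistrationRule",
                "minDistinctRegistrations": 2,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_rap(), indent=2))
```

The rubric item is kept as an IRI reference rather than a copy of the rubric text. That is what lets the SCD stay untouched while the RAP carries the operational logic.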

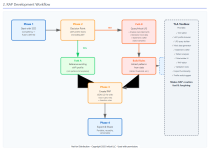

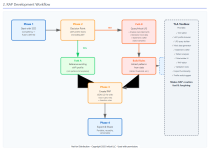

How It Works in Practice

Development workflow:

- Start with an SCD that includes a rubric

- Check whether relevant xAPI profiles exist:

  - If YES: Link to existing statement templates and patterns

  - If NO: Use your own query tools or the TLA Toolbox to query (or mock) an LRS, analyze the statements, and infer patterns

- Use the statement crafter to inject sample statements and test the assertion logic

- Build assertion rules in the RAP editor

- Export as a portable JSON-LD file

- Use the Audit/Suggest feature to propose additions to existing profiles or the creation of new profiles
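
The "bootstrap from data" path could start as simply as counting which verb and activity-type combinations actually occur in a sample of statements. The sketch below assumes statements are plain xAPI-shaped dicts. `craft_statement` is a hypothetical stand-in for a statement crafter, and `infer_patterns` with its `min_support` cutoff is an illustrative frequency heuristic. Neither is a feature of the TLA Toolbox.

```python
import uuid
from collections import Counter
from datetime import datetime, timezone

# Standard ADL verb and activity-type IRIs used for the mock data.
COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
ATTEMPTED = "http://adlnet.gov/expapi/verbs/attempted"
LESSON = "http://adlnet.gov/expapi/activities/lesson"
ASSESSMENT = "http://adlnet.gov/expapi/activities/assessment"


def craft_statement(email, verb, activity, activity_type, registration, ts):
    """Hypothetical helper: build a minimal xAPI statement dict for testing."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "mbox": f"mailto:{email}"},
        "verb": {"id": verb},
        "object": {"objectType": "Activity", "id": activity,
                   "definition": {"type": activity_type}},
        "context": {"registration": registration},
        "timestamp": ts.isoformat(),
    }


def infer_patterns(statements, min_support=2):
    """Return (verb, activity type) pairs seen at least min_support times.

    Each pair is only a *candidate* statement template, most frequent first.
    """
    counts = Counter(
        (s["verb"]["id"], s["object"].get("definition", {}).get("type"))
        for s in statements
    )
    return [pair for pair, n in counts.most_common() if n >= min_support]


if __name__ == "__main__":
    reg = str(uuid.uuid4())
    now = datetime.now(timezone.utc)
    sample = [
        craft_statement("learner@example.org", COMPLETED,
                        f"https://example.org/activities/lab-{i}", LESSON, reg, now)
        for i in range(3)
    ]
    sample.append(craft_statement("learner@example.org", ATTEMPTED,
                                  "https://example.org/activities/quiz-1",
                                  ASSESSMENT, reg, now))
    for verb, activity_type in infer_patterns(sample):
        print(verb, activity_type)
```

Each returned pair is only a candidate. A person would still decide whether it belongs in a RAP rule or in an Audit/Suggest proposal to an existing or new profile.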

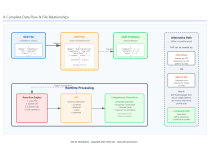

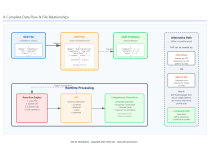

Runtime assertion process:

- Learning activities emit xAPI statements to an LRS

- Assertion engine loads relevant RAP(s)

- Engine evaluates evidence against RAP rules

- Emits "progressed" statements when partial thresholds are met (event-driven, reduces load)

- Emits final "achieved"/"mastered" statement when all rubric criteria are satisfied

- All assertions link back to the evidence trail
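
Below is a minimal sketch of that runtime loop. It assumes the engine receives statements one at a time (for example from an LRS subscription) and handles a single RAP with one threshold rule, one sequence rule, and one multi-registration rule. `AssertionEngine`, `LearnerState`, and the rule names are hypothetical, and learners are assumed to be identified by `mbox`. Events are emitted only when a rule flips from unsatisfied to satisfied, which is one possible reading of "event-driven, reduces load". Each event carries the IDs of the contributing statements, so every assertion links back to its evidence. Turning these events into actual xAPI statements is left out.

```python
from dataclasses import dataclass, field

COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
ATTEMPTED = "http://adlnet.gov/expapi/verbs/attempted"


@dataclass
class LearnerState:
    """Evidence accumulated for one learner across all registrations."""
    completed: dict = field(default_factory=dict)    # activity id -> first statement id
    registrations: set = field(default_factory=set)  # registrations that contributed
    first_done: bool = False                         # sequence step A observed
    sequence_ok: bool = False                        # step B observed after A
    satisfied: set = field(default_factory=set)      # names of rules already met
    evidence: list = field(default_factory=list)     # ids of contributing statements
    achieved: bool = False


class AssertionEngine:
    """Hypothetical event-driven evaluator for a single RAP."""

    def __init__(self, activities, min_count, first, then, min_registrations):
        self.activities = set(activities)
        self.min_count = min_count              # threshold: N of these activities
        self.first, self.then = first, then     # (verb, activity) pairs: A before B
        self.min_registrations = min_registrations
        self.state = {}

    def _rules(self, st):
        return {
            "threshold": len(st.completed) >= self.min_count,
            "sequence": st.sequence_ok,
            "registrations": len(st.registrations) >= self.min_registrations,
        }

    def on_statement(self, stmt):
        """Consume one statement and return any assertion events it triggers."""
        learner = stmt["actor"]["mbox"]  # assumes mbox-identified agents
        st = self.state.setdefault(learner, LearnerState())
        verb, activity = stmt["verb"]["id"], stmt["object"]["id"]

        relevant = False
        if verb == COMPLETED and activity in self.activities:
            st.completed.setdefault(activity, stmt["id"])
            relevant = True
        if (verb, activity) == self.first:
            st.first_done = True
            relevant = True
        elif (verb, activity) == self.then and st.first_done:
            st.sequence_ok = True
            relevant = True
        if not relevant:
            return []  # not evidence for this RAP; nothing to re-evaluate

        st.evidence.append(stmt["id"])
        registration = stmt.get("context", {}).get("registration")
        if registration:
            st.registrations.add(registration)

        rules = self._rules(st)
        newly_met = sorted(n for n, ok in rules.items() if ok and n not in st.satisfied)
        st.satisfied.update(newly_met)
        if not newly_met or st.achieved:
            return []
        if all(rules.values()):
            st.achieved = True
            return [{"learner": learner, "verb": "achieved",
                     "evidence": list(st.evidence)}]
        return [{"learner": learner, "verb": "progressed", "rule": n,
                 "evidence": list(st.evidence)} for n in newly_met]
```

Evidence is keyed by learner rather than by `context.registration`. As a result, statements from different courses accumulate into the same state, and the registration rule simply counts how many distinct registrations contributed.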

How This Fits With Existing Specs

IEEE SCD Spec: RAP builds on SCDs without modifying them. It references SCD competency and rubric IDs, adding operational assertion logic as a separate concern.

xAPI Spec: RAP is fully compatible with xAPI. When profiles exist, RAP references them. When they don't, RAP provides a practical path forward that can later contribute to formal profile development.

JSON-LD: RAP uses JSON-LD for portability, semantic linking, and alignment with both IEEE and xAPI ecosystem practices.

The key innovation is separation of concerns:

- SCD = WHAT (competency definition + rubric structure)

- RAP = HOW (assertion rules + evidence evaluation)

- xAPI Profile = Reusable patterns (when available)

Why I'm Sharing This

I'm building this into the TLA Toolbox platform, but before I get too far down the path, I want to:

- Get feedback - Does this approach make sense? Are there obvious problems I'm missing?

- Learn from the community - Am I reinventing something that already exists? Are there existing specs or approaches that already solve this problem?

- Invite collaboration - If this resonates with others facing similar challenges, I'd love to work together on refining the model

- Contribute back - The goal is to create something that can inform future standards work, not to create a proprietary silo

Open Questions I'm Wrestling With

- Should RAP be proposed as an extension to the IEEE SCD spec, or remain a separate but linked specification?

- How should we handle versioning when both SCDs and xAPI profiles evolve?

- What's the right level of expressiveness for assertion rules? (Too simple = not useful; too complex = nobody uses it)

- How do we balance the need for quick prototyping with the rigor needed for formal standards?

- Are there existing competency assertion engines that already do something similar?

What I'm Looking For

Constructive criticism: Tell me what's wrong with this approach. What am I missing?

Pointers to existing work: If someone has already solved this problem, I'd love to learn about it rather than reinvent the wheel.

Use case validation: Does this address real problems you've encountered, or am I solving a problem that doesn't exist?

Technical feedback: Especially around the JSON-LD structure, multi-registration handling, and the event-driven assertion model.

Collaboration opportunities: If this resonates with your work, let's talk about how we might work together.

Diagrams

I've created a set of architectural diagrams showing:

- Conceptual relationships between SCD, RAP, and xAPI profiles

- RAP development workflow (with both "use existing profile" and "bootstrap from data" paths)

- Runtime competency assertion process

- Complete data flow and file relationships

Bottom Line

I'm trying to make evidence-based competency assertion practical today while staying aligned with standards and creating a pathway to contribute back to formal specifications. RAP is my attempt to bridge the gap between competency definitions and xAPI evidence in a way that's flexible, portable, and standards-friendly.

I'm fully prepared to be told this already exists, that I'm approaching it wrong, or that there's a better way. That's why I'm here: to learn and, hopefully, to contribute something useful to the community.

Looking forward to your thoughts!