The Problem I'm Trying to Solve

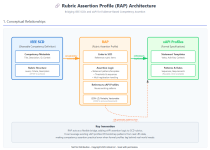

I've been working with IEEE Shareable Competency Definitions (SCDs) and xAPI for evidence-based competency assertion, and I keep hitting the same challenge: there's a gap between the definition of a competency (especially one with a rubric) and the operational rules for asserting that someone has achieved it based on real xAPI evidence.

The TLA standards ecosystem now has strong coverage of three concerns. IEEE 1484.20.3-2022 (SCD) defines competencies and their rubric structures; it tells us WHAT we're assessing and what proficiency looks like. IEEE 2881-2025 (LMT) describes learning resources and events and formally links them to competencies via the teaches and assesses properties; it tells us WHERE competencies attach to learning content. xAPI profiles define how to capture learning evidence as statements; they give us reusable patterns for HOW activities generate evidence.

But what's missing is the fourth concern: WHEN and under what conditions do we assert that someone has achieved a specific rubric criterion level, based on patterns of xAPI statements in an LRS?

This becomes especially challenging when:

- xAPI profiles for a domain don't exist yet or are incomplete

- Evidence spans multiple learning experiences (different registrations)

- We need granular progress indicators, not just binary "achieved/not achieved"

- We want to prototype competency assertion quickly without waiting for formal profile development

The Proposed Solution: Rubric Assertion Profile (RAP)

I'm proposing a new JSON-LD data model called a Rubric Assertion Profile (RAP) that acts as a bridge between SCD rubric structures and xAPI evidence streams. Think of it as augmenting SCD rubric items with xAPI-specific assertion logic, without modifying the SCD definitions themselves.

RAP is to competency assertion engines what an xAPI Profile is to xAPI statement generation: a declarative configuration that tells the engine what to do.

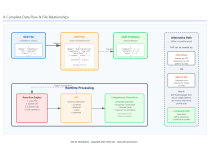

What's in a RAP?

A RAP is a portable JSON-LD file that contains:

- References to SCD rubric components by URI. Specifically:

  - scd:CompetencyDefinition instances (the competency being assessed)

  - scd:RubricCriterion instances (the evaluative dimensions, e.g., "Safe Handling Procedure")

  - scd:RubricCriterionLevel instances (the proficiency thresholds, e.g., "Proficient" with score 3)

  These reference the same globally unique URIs/IRIs that the SCD standard requires, ensuring RAP consumes the standard's own identifiers rather than inventing a parallel addressing scheme.

- Assertion rules. Logic for evaluating xAPI evidence:

  - Statement templates and patterns (can reference existing xAPI profiles)

  - Thresholds (e.g., "completed 5 of 7 activities")

  - Sequences (e.g., "must complete A before attempting B")

  - Multi-registration handling (evidence across multiple courses/experiences)

  - Confidence calculation methods (how scores, completion counts, and qualitative evidence combine into a confidence value)

- References to xAPI profiles. When formal profiles exist, RAP leverages their statement templates and patterns. When they don't, RAP can bootstrap patterns from actual LRS data, with a pathway to contribute those patterns back to formal profile development.

- References to LMT metadata (optional). When operating within a TLA ecosystem, RAP can reference IEEE 2881-2025 metadata in the Experience Index (XI) to discover which CompetencyDefinitions are relevant for a given learning activity, rather than duplicating that activity-to-competency mapping internally.
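To make the contents listed above concrete, here is a rough sketch of what a minimal RAP file might look like, built as a Python dict and serialized to JSON. Nothing here is a finalized schema: every `rap:` term, the `example.org` IRIs (including the placeholder `scd` namespace), and the shape of the rule object are assumptions made up for illustration. Only the ADL `completed` verb IRI is a real identifier.

```python
import json

# Hypothetical RAP document. All "rap:" terms, all example.org IRIs, and the
# rule layout are illustrative assumptions, not part of any published spec.
rap = {
    "@context": {
        "scd": "https://example.org/scd#",  # placeholder, not the official SCD namespace
        "rap": "https://example.org/rap#",
    },
    "@id": "https://example.org/rap/safe-handling",
    "@type": "rap:RubricAssertionProfile",
    # The competency being assessed, referenced by its SCD URI.
    "rap:competency": {"@id": "https://example.org/scd/competency/safe-handling"},
    # Empty list: no formal xAPI profile exists yet for this domain.
    "rap:xapiProfiles": [],
    "rap:rules": [
        {
            "@type": "rap:AssertionRule",
            "rap:criterion": {
                "@id": "https://example.org/scd/criterion/safe-handling-procedure"
            },
            "rap:criterionLevel": {
                "@id": "https://example.org/scd/level/proficient"
            },
            "rap:verb": "http://adlnet.gov/expapi/verbs/completed",
            # "completed 5 of 7 activities"
            "rap:threshold": {"rap:required": 5, "rap:outOf": 7},
            # Count evidence across registrations, not just within one.
            "rap:multiRegistration": True,
        }
    ],
}

rap_json = json.dumps(rap, indent=2)
print(rap_json)
```

The point of the sketch is that the RAP only points at SCD components by URI and keeps the assertion logic in its own rule objects, so the SCD itself is never modified.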

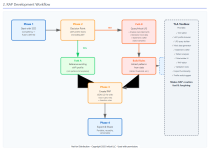

How It Works in Practice

Development workflow:

- Start with an SCD that includes a rubric (CompetencyFramework → CompetencyDefinition → RubricCriterion → RubricCriterionLevel)

- Check if relevant xAPI profiles exist:

  - If YES: Link to existing statement templates and patterns

  - If NO: Use TLA Toolbox to query/mock an LRS, analyze statements, and infer patterns

- Use the statement crafter to inject sample statements and test assertion logic

- Build assertion rules in the RAP editor, binding each rule to specific scd:RubricCriterion and scd:RubricCriterionLevel URIs

- Export as a portable JSON-LD file

- Use the Audit/Suggest feature to propose additions to existing xAPI profiles or the creation of new ones

Runtime workflow:

- Learning activities emit xAPI statements to an LRS

- Assertion engine loads relevant RAP(s)

- Engine evaluates evidence against RAP rules

- Engine emits "progressed" xAPI statements when partial thresholds are met (event-driven, reduces polling load). These statements reference the scd:RubricCriterionLevel URI to indicate which level was reached, using verbs from the ADL vocabulary (e.g., progressed) or a RAP-defined extension vocabulary.

- Engine emits a final "achieved"/"mastered" statement when all rubric criteria are satisfied at or above the mastery threshold defined by the CompetencyDefinition's competencyLevel property

- All assertions link back to the evidence trail via xAPI statement references
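As a rough illustration of the evaluation step, here is a minimal sketch of a threshold rule being checked against a batch of statements and producing a "progressed" statement. It assumes statements are already fetched from the LRS as plain dicts. `ThresholdRule`, `evaluate`, and the evidence extension IRI are hypothetical names, the statement ids are placeholders rather than real UUIDs, and using the rubric level URI as the statement object is just one possible design.

```python
from dataclasses import dataclass

COMPLETED = "http://adlnet.gov/expapi/verbs/completed"
PROGRESSED = "http://adlnet.gov/expapi/verbs/progressed"
# Hypothetical extension IRI for listing the evidence statement ids.
EVIDENCE_EXT = "https://example.org/rap/extensions/evidence"


@dataclass(frozen=True)
class ThresholdRule:
    level_uri: str           # scd:RubricCriterionLevel URI this rule asserts
    activity_ids: frozenset  # activities whose completion counts as evidence
    required: int            # e.g. 5 (of 7)


def evaluate(rule, actor, statements):
    """Return a 'progressed' statement if the threshold is met, else None."""
    evidence = {}
    for s in statements:
        activity = s["object"]["id"]
        if (s["actor"] == actor
                and s["verb"]["id"] == COMPLETED
                and activity in rule.activity_ids):
            # Count each activity once, whichever registration it came from.
            evidence.setdefault(activity, s["id"])
    if len(evidence) < rule.required:
        return None
    return {
        "actor": actor,
        "verb": {"id": PROGRESSED},
        "object": {"id": rule.level_uri},
        # Link back to the evidence trail.
        "context": {"extensions": {EVIDENCE_EXT: sorted(evidence.values())}},
    }


# Usage: 5 of 7 activities completed, spread over two registrations.
rule = ThresholdRule(
    level_uri="https://example.org/scd/level/proficient",
    activity_ids=frozenset(f"https://example.org/activity/{i}" for i in range(7)),
    required=5,
)
actor = {"mbox": "mailto:learner@example.org"}
statements = [
    {"id": f"stmt-{i}", "actor": actor, "verb": {"id": COMPLETED},
     "object": {"id": f"https://example.org/activity/{i}"},
     "context": {"registration": f"reg-{i % 2}"}}
    for i in range(5)
]
assertion = evaluate(rule, actor, statements)
```

Because evidence is keyed by activity rather than by registration, repeated completions of the same activity in different courses don't inflate the count. A real engine would also need sequence checks and a confidence calculation, which this sketch leaves out.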

How This Fits With Existing Specs

IEEE SCD (1484.20.3-2022): RAP builds on SCDs without modifying them. It references CompetencyDefinition, RubricCriterion, and RubricCriterionLevel instances by their URIs, adding operational assertion logic as a separate concern. The SCD standard defines the rubric structure and mastery thresholds; RAP defines the rules for determining when those thresholds have been met based on evidence.

IEEE LMT (2881-2025): The LMT standard's teaches and assesses properties (defined in the LRMI namespace, with scd:CompetencyDefinition as rangeIncludes) establish which competencies are associated with which learning activities. RAP can consume this mapping from the Experience Index rather than requiring each RAP file to redeclare activity-to-competency relationships. When 2881 metadata is available, RAP focuses purely on evidence evaluation; when it isn't, RAP can include its own competency mappings as a fallback.

xAPI Spec: RAP is fully compatible with xAPI. When profiles exist, RAP references their statement templates and patterns. When they don't, RAP provides a practical path forward that can later contribute to formal profile development. RAP's output (progress and assertion statements) follows xAPI conventions.
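The LMT fallback behavior described above (use Experience Index metadata when it's there, otherwise the RAP's own mappings) could be sketched as a simple lookup. This is only an assumption about how an engine might do it: `competencies_for_activity` is a hypothetical helper, and the flat dict layouts stand in for whatever the XI and the RAP file actually expose.

```python
def competencies_for_activity(activity_id, xi_metadata, rap_fallback):
    """Prefer LMT metadata from the Experience Index; else use the RAP's own mapping.

    xi_metadata:  {activity_id: {"assesses": [competency URIs]}}  (assumed layout)
    rap_fallback: {activity_id: [competency URIs]}                (assumed layout)
    """
    record = xi_metadata.get(activity_id)
    if record:
        # Only 'assesses' is used here; whether 'teaches' should also count
        # as grounds for assertion is a design decision left open.
        assessed = list(record.get("assesses", []))
        if assessed:
            return assessed
    return list(rap_fallback.get(activity_id, []))
```

With this shape, a RAP file only needs to carry its own activity-to-competency mappings for activities the XI doesn't describe.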

CASS: RAP complements CASS rather than replacing it. CASS provides the assertion processing engine and the assertion storage/query infrastructure. RAP provides the declarative configuration that tells CASS (or any assertion engine) when to create assertions, how to calculate confidence, and which SCD rubric components to evaluate. Think of RAP as the configuration file that an assertion processor like CASS loads to know what to do.

JSON-LD: RAP uses JSON-LD for portability, semantic linking, and alignment with both IEEE and xAPI ecosystem practices. Both IEEE standards (SCD and LMT) use RDF-based models with URI/IRI identification; RAP's use of JSON-LD ensures its references to those URIs are machine-resolvable.

The Four Concerns

The key design principle is separation of concerns across the standards ecosystem:

| Concern | Standard/Spec | What It Answers |
|---|---|---|
| WHAT competencies exist and what proficiency looks like | IEEE 1484.20.3-2022 (SCD) | CompetencyDefinition + Rubric + RubricCriterionLevel |
| WHERE competencies attach to learning content | IEEE 2881-2025 (LMT) | LearningResource/Event teaches/assesses CompetencyDefinition |
| HOW evidence is captured | xAPI Profile | Statement templates, patterns, verb vocabulary |
| WHEN to assert achievement | RAP (proposed) | Assertion rules, thresholds, confidence calculation |

No existing standard covers the fourth concern declaratively. RAP aims to fill that gap.

Diagrams

I've created a set of architectural diagrams showing:

- Conceptual relationships between SCD, RAP, LMT, and xAPI profiles

- RAP development workflow (with both "use existing profile" and "bootstrap from data" paths)

- Runtime competency assertion process

- Complete data flow and file relationships